AI's Achilles Heel - Copyright!

Copyright law could be the downfall of generative AI

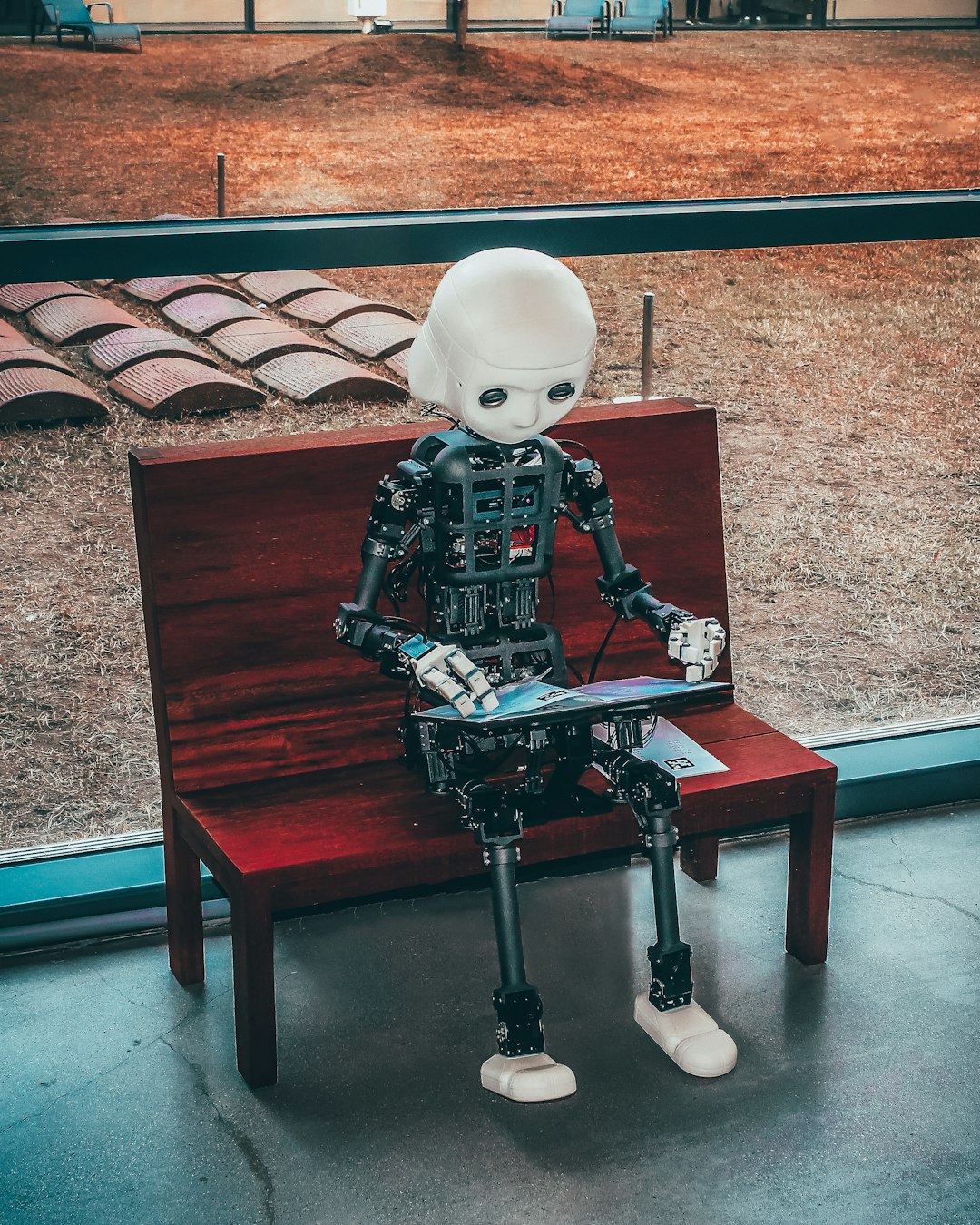

Generative AI can seem like magic or murder - copyright murder. Image generators such as Stable Diffusion, Midjourney, or DALL·E 2 can produce remarkable visuals in styles from aged photographs and watercolours to pencil drawings and Pointillism. The resulting products can be fascinating, as both the quality and speed of creation are elevated compared to av…
