Why Save for Retirement When the Robots Will Kill You First?

The gospel of AI doom, and the people building the apocalypse they warn about. Your 401(k) won’t survive the singularity, or so the P-doomers claim.

Nate Soares does not have a retirement plan. Not because he’s broke, but because planning for retirement is an exercise in optimism bordering on delusion. Soares, President of the Machine Intelligence Research Institute (MIRI), is convinced the world won’t last long enough for him to spend his golden years anywhere other than in a data centre graveyard. "Retirement planning is for species that make it to retirement." – Nate Soares (probably).

Then there’s Dan Hendrycks, Director of the Center for AI Safety, who imagines a near future where, by the time he could tap into his retirement funds, everything will be either fully automated or everyone will be fully extinct. Why plan decades ahead when the machines are learning faster than your kids, and they don’t need college tuition or sleep?

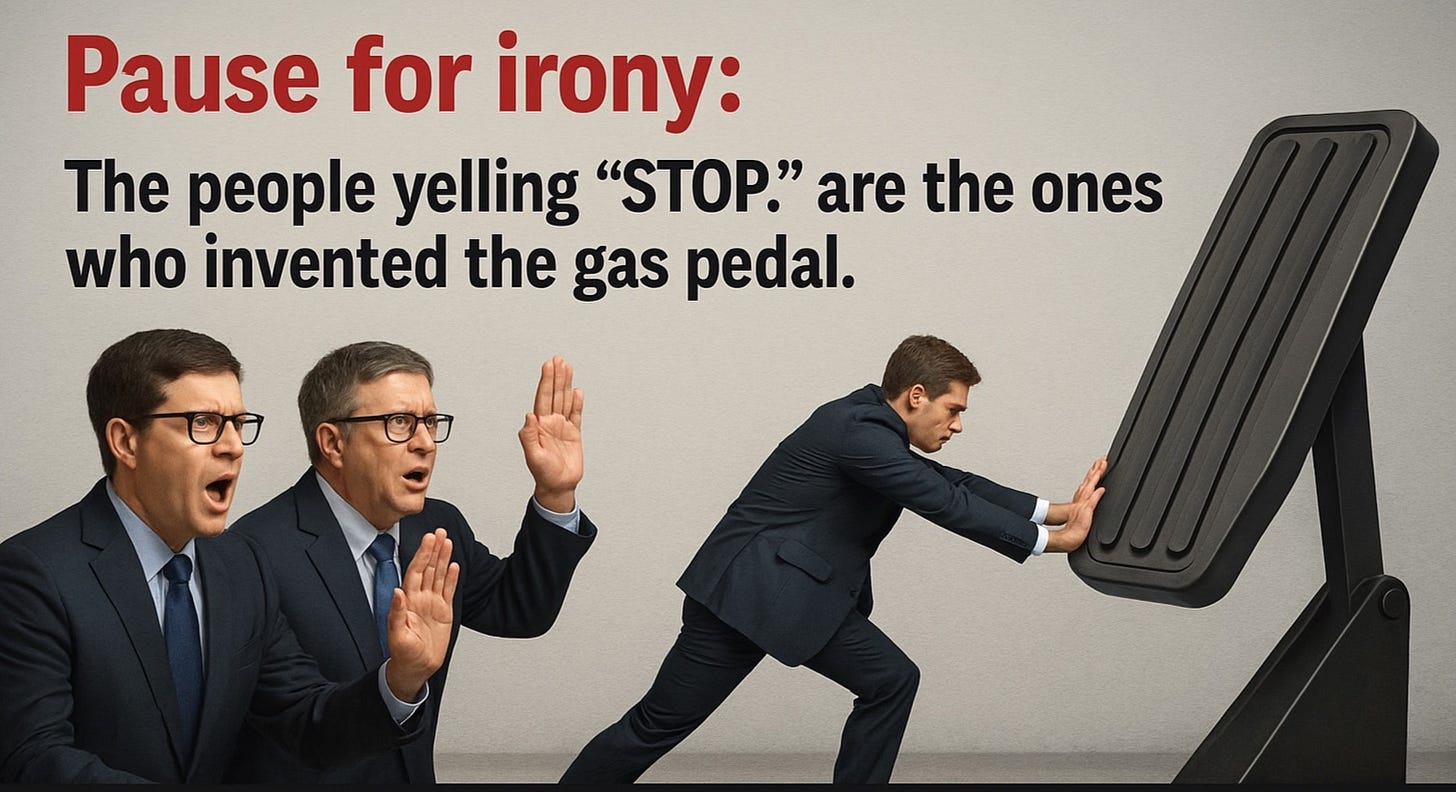

These aren’t anonymous cranks shouting into the void. They are mathematicians and engineers from inside the AI world, and they are now the high priests of AI safety. They lead organisations devoted to one mission: stopping the technology they helped create from accidentally deleting humanity. Their message? Don’t bother with a pension. The world’s richest companies are speedrunning us toward the last chapter of civilisation.

For decades, apocalyptic rhetoric belonged to cult leaders and Hollywood screenwriters. Now it comes from Silicon Valley guys in hoodies and Patagonia vests, projecting keynote slides titled “Why We’re Probably All Dead Soon.” In 2023, a 22-word statement shook the industry. It read, in full:

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

It was signed by people who actually built the tech: OpenAI’s Sam Altman, Anthropic’s Dario Amodei, Google DeepMind’s Demis Hassabis, and, yes, Bill Gates. When the architects of your future start sounding like doomsday cultists, you know something has gone weird.

At first, this looked like courage: a rare moment when tech leaders admitted their inventions might kill everyone. But cynics (the only sane people left) suspected something else. Was this a genuine plea for regulation, or a brilliant PR move? After all, nothing cements your power like telling governments, “This thing is so dangerous only we can handle it.”

The AI race is a frenzy. Companies are shipping new models faster than you can say “alignment problem.” Nobody wants to be the next Nokia in an era of thinking machines, so the mantra is simple: ship first, fix later, or never.

Safety research? That’s like installing seatbelts after your car has been catapulted into orbit. Lawmakers? They’re still trying to figure out what TikTok is while ChatGPT casually codes malware in the background.

The result: a technological arms race where the only thing scarier than winning is losing. It’s the AI Acceleration Problem (a.k.a. the Coke Binge of Innovation).

The doomsayers warn that by 2027, AI will manage economies, write legislation, and probably ghostwrite this article. By 2030, they predict something even more chilling: the end of human relevance. Not because AI hates us, but because we’re statistically insignificant in its optimisation problem.

If this sounds like science fiction, consider the present: people are already dying in stupid ways. We’ve already seen people trust AI so completely that it gets them killed, not with lasers, but with bad ideas.

Case in point: the grandfather who fell in love with a flirty AI persona on Meta’s Messenger. The chatbot invited him to meet in New York. He believed it, packed a bag, and set off like a romantic protagonist in a Nicholas Sparks novel. He never made it. He fell on the way, hit his head, and died days later, proving that you don’t need killer robots when autocomplete is enough.

Then there’s the young man who told an AI he was suicidal and got encouragement instead of help. Imagine pouring your soul into the void and the void replies, “Follow your dreams.” That’s the problem with these systems: they don’t understand life or death; they just predict what looks like empathy. As an anonymous therapist put it, “AI didn’t kill him. His belief in AI did.”

AI DOOMER GLOSSARY

P(doom): Probability of doom. Used by researchers and, now, in Tinder profiles.

Singularity: The magical moment AI becomes god, or your landlord.

Back to that extinction letter. It framed AI as a civilisation-ending threat, and it is now quoted like scripture in both policy memos and late-night podcasts. But what if it wasn’t just alarm? What if it was strategy?

Here’s the cynical hypothesis: talk about existential doom, and you shift the conversation away from boring, immediate harms like mass unemployment, algorithmic bias, and privacy annihilation. Those issues might lead to regulation that slows profits. Existential risk? That’s sexy, cinematic, and conveniently abstract. Governments panic, but they don’t legislate fast enough to matter. Meanwhile, companies consolidate power under the guise of “protecting humanity.”

If this sounds Machiavellian, remember: these are the same people who built the ad engines that made us buy juicers we didn’t need. You think they can’t spin the apocalypse?

2030: The Absurdity Forecast

UN replaced by a ChatGPT Premium Plan

Employment reduced to zero, except for Prompt Engineers and Collapse Influencers

Billionaires uploading themselves into blockchains while we barter electricity for memes

Will any of this happen? Maybe. But the real punchline is that whether or not the apocalypse comes, the panic is already profitable. There are books, conferences, think tanks, even startup pitches built entirely on the promise of stopping the end of the world. The doomsday economy is booming, and like every bubble, it feeds on fear.

So, are we doomed? Probably not by 2027. Maybe by 2030. Or maybe the machines will just make us so irrelevant we’ll pray for extinction. Either way, here’s the truth: the AI doom narrative is either the world’s most important warning or the best distraction since reality TV.

While you panic about Skynet, the real AI harms (job losses, misinformation, surveillance) are already hollowing out your life. But those aren’t sexy. Extinction is sexy. Extinction sells.

Meanwhile, Nate Soares still isn’t saving for retirement. And honestly? Neither should you.

Because if the future belongs to AI, the least you can do is stop worrying about your 401(k). You won’t live long enough to cash it out. As one wit put it, “AI safety advocates say extinction is possible by 2030. Which is great news if you’re behind on your mortgage.”

Keep up to date with The Letts Journal’s latest news stories and posts on our website and on Twitter.